Concurrency Limits

Concurrency Limits Overview

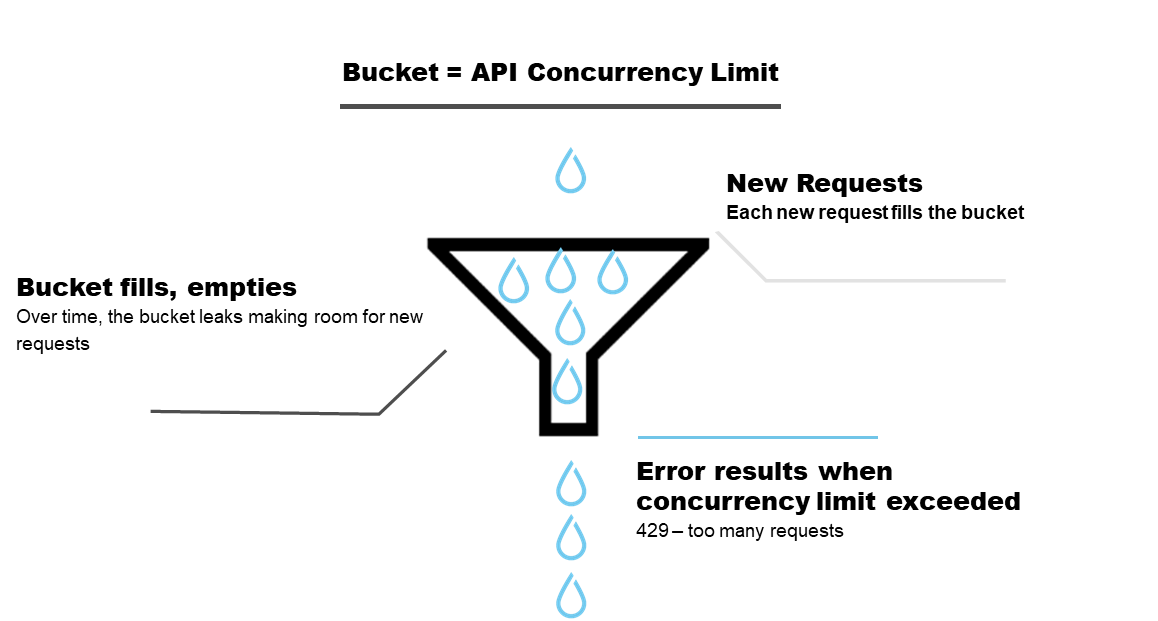

ICE Mortgage Technology enforces API concurrency limits: a cap on the number of API calls that can be in progress simultaneously at any given time.

Each lender has a hard-stop limit on concurrent API calls, set separately for each Encompass environment. The default limit is 30 concurrent calls per lender environment.

If the lender's hard-stop limit of concurrent API calls is fully utilized, further calls from all processes (both partner- and lender-initiated) are rejected.

Working with Concurrency Limits

Each API response includes two concurrency headers: X-Concurrency-Limit-Limit and X-Concurrency-Limit-Remaining. Together they tell the API consumer its allocated concurrency limit and how much of that concurrency remains available at that moment.
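A minimal Python sketch of reading these two headers from a response. It assumes the headers arrive as a string-to-string mapping (for example, `response.headers` from the `requests` library); `ConcurrencyStatus` and `read_concurrency_headers` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ConcurrencyStatus:
    limit: int      # value of X-Concurrency-Limit-Limit
    remaining: int  # value of X-Concurrency-Limit-Remaining


def read_concurrency_headers(headers: Mapping[str, str]) -> Optional[ConcurrencyStatus]:
    """Extract the two concurrency headers from a response header mapping.

    HTTP header names are case-insensitive, so names are compared in lowercase.
    Returns None if either header is missing or is not an integer.
    """
    lowered = {name.lower(): value for name, value in headers.items()}
    try:
        return ConcurrencyStatus(
            limit=int(lowered["x-concurrency-limit-limit"]),
            remaining=int(lowered["x-concurrency-limit-remaining"]),
        )
    except (KeyError, ValueError):
        return None
```

For example, headers of `X-Concurrency-Limit-Limit: 30` and `X-Concurrency-Limit-Remaining: 12` yield `ConcurrencyStatus(limit=30, remaining=12)`.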

For lenders and partners whose service or integration is expected to make a large volume of calls to a lender’s instance (event-based services, uploading and downloading large volumes of attachments, threading and parallel processing, etc.), it is important to design the integration so that the API caller can limit and configure the number of concurrent calls it makes to a lender’s environment.

When the lender's hard-stop limit of concurrent API calls is fully utilized, further calls from all processes (partner- and lender-initiated) are rejected with a 429 - Too Many Requests status code. At that point, requests from the lender and from any Integration Applications stop executing. The 429 - Too Many Requests status code continues to be returned until the remaining concurrency (X-Concurrency-Limit-Remaining) is greater than 0.
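One way to let the caller limit and configure its own concurrency is to wrap every API call in a bounded semaphore. The sketch below is a hypothetical helper, not part of any ICE SDK: `max_concurrent` is a local setting chosen by the integrator, and `fn` stands in for whatever function actually performs the HTTP request.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


class ConcurrencyCap:
    """Caps how many API calls this process has in flight at the same time."""

    def __init__(self, max_concurrent: int) -> None:
        if max_concurrent < 1:
            raise ValueError("max_concurrent must be at least 1")
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, fn: Callable[[T], R], arg: T) -> R:
        # Block until a slot is free; the slot is released even if fn raises.
        with self._slots:
            return fn(arg)


def run_all(fn: Callable[[T], R], items: Iterable[T],
            cap: ConcurrencyCap, workers: int = 16) -> List[R]:
    # The pool may have more threads than the cap allows; the semaphore,
    # not the pool size, bounds how many calls run simultaneously.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda item: cap.call(fn, item), items))
```

Because the lender's limit is shared by every process calling that environment, a partner integration would typically set `max_concurrent` well below the environment limit; with the default limit of 30 and the 20% partner guidance below, that is at most 6.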

Best practice is to use exponential back-off when a concurrency violation occurs (the concurrency limit has been reached), to meter requests proactively when the available concurrency falls outside an acceptable range, and to stay within the remaining ratio limits described below.
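A minimal sketch of exponential back-off with "full jitter" for 429 responses. It assumes `send` is a hypothetical callable that performs one request and returns its HTTP status code; the base delay, cap, and retry count are illustrative defaults, not values published by ICE Mortgage Technology.

```python
import random
import time
from typing import Callable


class TooManyRequests(Exception):
    """Raised when retries are exhausted and the API is still returning 429."""


def call_with_backoff(
    send: Callable[[], int],
    max_retries: int = 6,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `send` (returns an HTTP status code), retrying while it returns 429.

    The delay ceiling doubles after each 429 (base_delay, 2x, 4x, ...) up to
    max_delay; the actual wait is a random value between 0 and that ceiling.
    """
    for attempt in range(max_retries + 1):
        status = send()
        if status != 429:
            return status
        if attempt == max_retries:
            break
        ceiling = min(max_delay, base_delay * (2 ** attempt))
        sleep(random.uniform(0, ceiling))
    raise TooManyRequests(f"still throttled after {max_retries} retries")
```

Randomizing the wait spreads retries from many threads or clients over time instead of having them all hit the lender's environment again at the same moment.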

The Integration Application can use the X-Concurrency-Limit-Limit and X-Concurrency-Limit-Remaining response headers to calculate the remaining ratio when metering its use of the available concurrency.

Use the following formula to calculate the Remaining Ratio, which is the fraction of the concurrency limit currently in use:

(X-Concurrency-Limit-Limit - X-Concurrency-Limit-Remaining) / X-Concurrency-Limit-Limit = Remaining Ratio

For lenders: the Remaining Ratio should not exceed 80% of the allowed concurrency limit.

Examples:

- Over-utilization of the rate limit: (30 - 2) / 30 = 93%

- Correct utilization of the rate limit: (30 - 20) / 30 = 33%

For ISV partners: the Remaining Ratio should not exceed 20% of the allowed concurrency limit.

Examples:

- Over-utilization of the rate limit: (30 - 15) / 30 = 50%

- Correct utilization of the rate limit: (30 - 25) / 30 = 17%
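The formula and thresholds above can be checked with a short sketch. The `MAX_RATIO` keys (`lender`, `isv_partner`) are labels invented for this example; the 0.80 and 0.20 values come from the guidance above.

```python
# Maximum recommended Remaining Ratio per caller type (keys are illustrative labels).
MAX_RATIO = {"lender": 0.80, "isv_partner": 0.20}


def remaining_ratio(limit: int, remaining: int) -> float:
    """(Limit - Remaining) / Limit: the share of the concurrency limit in use."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return (limit - remaining) / limit


def is_over_utilized(limit: int, remaining: int, caller: str) -> bool:
    """True if the Remaining Ratio exceeds the recommended maximum for `caller`."""
    return remaining_ratio(limit, remaining) > MAX_RATIO[caller]


# Reproduce the four examples above, each with a limit of 30.
for caller, remaining in [("lender", 2), ("lender", 20),
                          ("isv_partner", 15), ("isv_partner", 25)]:
    ratio = remaining_ratio(30, remaining)
    over = is_over_utilized(30, remaining, caller)
    print(f"{caller}: (30 - {remaining}) / 30 = {ratio:.0%}, over-utilized: {over}")
```

Running it prints 93%, 33%, 50%, and 17%, flagging the first and third cases as over-utilized, which matches the examples.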

If the Remaining Ratio is above the recommended ratio, the Integration Application should incorporate a proactive metering process (for example, holding back new requests) so that X-Concurrency-Limit-Remaining increases. The threshold ratio can also be made a configuration setting in your Integration Application.
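A minimal sketch of a proactive metering gate with a configurable threshold. The pause-and-recheck policy, the class name `ProactiveMeter`, and its parameters are assumptions for illustration, not an ICE-defined mechanism: call `observe` with the header values from each response, and call `wait_turn` before sending the next request.

```python
import threading
import time
from typing import Callable, Optional


class ProactiveMeter:
    """Holds back new requests while the last observed Remaining Ratio is above
    a configurable threshold (a hypothetical policy for illustration)."""

    def __init__(self, threshold: float, pause: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.threshold = threshold  # e.g. 0.20 for an ISV partner, 0.80 for a lender
        self.pause = pause          # seconds to wait before re-checking
        self._sleep = sleep
        self._lock = threading.Lock()
        self._ratio: Optional[float] = None  # unknown until the first response

    def observe(self, limit: int, remaining: int) -> None:
        """Record X-Concurrency-Limit-Limit / -Remaining from the latest response."""
        if limit > 0:
            with self._lock:
                self._ratio = (limit - remaining) / limit

    def wait_turn(self, max_checks: int = 10) -> int:
        """Pause while the ratio is over the threshold; return the pauses taken."""
        pauses = 0
        while pauses < max_checks:
            with self._lock:
                ratio = self._ratio
            if ratio is None or ratio <= self.threshold:
                break
            self._sleep(self.pause)
            pauses += 1
        return pauses
```

Because the ratio only changes when a response arrives, a stale reading could otherwise block a caller indefinitely; `max_checks` bounds the wait, after which the request proceeds and relies on back-off if it is throttled.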

Best Practices for Efficient Use of API Concurrency

The best way to avoid being throttled is to reduce the number of simultaneous requests; this can usually be achieved through code optimization.

Consider the following practices when designing your app/integration:

- Cache frequently used data rather than fetching it repeatedly

- Batch requests when processing webhook notifications

- Eliminate unnecessary and redundant API requests

- Use a message queue to regulate call frequency

- Implement an exponential back-off to gracefully recover from throttle errors

- Be cautious with timeouts – concurrency depends on how long requests take to execute, not on how long your app waits for responses

- Use metadata, included with API responses, to meter and prioritize requests

- Reduce latency by initiating requests as a super administrator – fewer business rules are applied with this type of account
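As an example of the first practice in the list above, a small time-to-live (TTL) cache keeps frequently used data in memory so that repeat lookups do not call the API. `TTLCache` and the `fetch` callback are hypothetical; pick a TTL that matches how stale the data is allowed to be.

```python
import threading
import time
from typing import Callable, Dict, Generic, Hashable, Tuple, TypeVar

V = TypeVar("V")


class TTLCache(Generic[V]):
    """Caches fetched values for `ttl` seconds so repeat lookups skip the API."""

    def __init__(self, fetch: Callable[[Hashable], V], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch  # the function that actually calls the API
        self._ttl = ttl
        self._clock = clock
        self._lock = threading.Lock()
        self._entries: Dict[Hashable, Tuple[float, V]] = {}

    def get(self, key: Hashable) -> V:
        now = self._clock()
        with self._lock:
            entry = self._entries.get(key)
            if entry is not None and now - entry[0] < self._ttl:
                return entry[1]  # still fresh: no API call
        value = self._fetch(key)  # missing or expired: one API call
        with self._lock:
            self._entries[key] = (now, value)
        return value
```

The fetch runs outside the lock, so two threads that miss on the same key at the same moment may both call the API; a production version might add per-key locking.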

Are you using the right automation for your use case?

- Webhooks, List APIs, Batch Calls - choose the right tool for your use case

- Use webhook events to listen for changes instead of polling via GET calls

- Get multiple attachments in a single call

- Leverage the Pipeline API to avoid multiple requests to retrieve loans

- Take advantage of Enhanced Field Change Events (GA in 24.2), which already include the changed data
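When polling is replaced with webhooks, several notifications for the same loan can arrive close together. A minimal sketch of coalescing a batch so that each loan is fetched at most once; the event shape (a dict with a `loan_id` key) is a hypothetical simplification, not the actual Encompass webhook payload.

```python
from typing import Dict, Iterable, List


def coalesce_events(events: Iterable[dict], key: str = "loan_id") -> List[str]:
    """Return each distinct resource ID once, in first-seen order.

    If several notifications arrive for the same loan within one batch,
    a single fetch after the batch is enough to see its latest state.
    """
    seen: Dict[str, None] = {}  # dicts preserve insertion order
    for event in events:
        seen.setdefault(event[key], None)
    return list(seen)
```

For example, a batch of events for loans A, B, A, C, B produces the fetch list A, B, C: three API calls instead of five.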

Options Available to Increase Limit

For clients that require higher API concurrency limits (e.g., those receiving 429 error responses or anticipating heavy API use), options to increase the limits are available and can be adjusted as needed.

Please contact your ICE Mortgage Technology relationship manager or support team to discuss concurrency limits and pricing.
